I’ve written about this before, but it’s worth remembering that almost nothing in sociotechnical systems is guaranteed to remain stable for very long. We’ve recently had two great examples of this, with the first being the changes to Twitter, and the second being ChatGPT (and, by extension, the new Bing).

In the first case, a platform which had long seemed relatively static (especially compared to all the rest) rather suddenly changed hands, which led to major changes in what it delivered. On some level, many of the technical changes to the actual functionality of the site were relatively minor. Much bigger, however, was the impact of many people abandoning the platform for alternatives like Mastodon. Although it seems to me that people have gradually been filtering back to Twitter, most people seem to have the sense that the experience has changed. Obviously, more dramatic infrastructural changes, like prioritizing tweets from paying users, could produce even more dramatic shifts. Regardless, it’s a good reminder that what we think of as “Twitter” is the product of a combination of people and software, either or both of which can shift dramatically in a short period of time.

ChatGPT, by contrast, has clearly made a much bigger splash, leading not just to a deluge of news coverage, but also to various rather sudden responses from many quarters, most notably Microsoft’s limited rollout of an update to their search engine, Bing, which incorporates a GPT-based system (with Google promising to do something similar relatively soon). The failures of that rollout have already been widely discussed, including making false claims about recent events, challenging users with a combative tone, and commenting on the physical appearance of researchers. What interests me here, however, is the broader claim that ChatGPT will massively disrupt traditional web search as we know it.

Although this suggestion may end up coming to pass in some form, I want to question the idea along two dimensions. The first is whether a chatbot-like system would ever “replace” traditional search, and the second, more fundamentally, is the very idea of using a chat interface for information retrieval.

Part of this comes down to what search is for, and a bit of history is useful here. In the earliest days of the internet, there was no obvious way to find what existed. One could try random IP addresses, but this was largely a hopeless exercise. Quite quickly, various types of directories began appearing, where people would manually curate lists of websites on various topics, such as Tim Berners-Lee’s Virtual Library. These directories remained popular for a surprisingly long time before they were largely killed by search engines. Several attempts at search had come along before this, of course, though limitations in both computation and data made it a hard problem. As is widely known, it was Google’s use of a particular algorithm – PageRank, in which a website’s ranking is recursively based on the ranking of those websites which link to it – that led (at least in part) to the victory of that particular website over all others.
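To make the recursive idea a little more concrete, here is a minimal sketch of PageRank computed by repeated updating on a tiny made-up web. This is a textbook-style illustration, not Google’s actual implementation: the function name `pagerank`, the toy graph `toy_web`, the fixed number of iterations, and the way pages with no outgoing links are handled are all my own assumptions, and the damping factor of 0.85 is simply the value most commonly quoted for the algorithm.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank scores for a small link graph.

    `links` maps each page to the list of pages it links to.
    All names and defaults here are illustrative assumptions.
    """
    # Collect every page that appears as a source or as a link target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)

    # Start with every page equally ranked.
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline share of rank...
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # ...plus an equal slice of the rank of each page linking to it.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# A hypothetical four-page web: "c" is linked to by every other page.
toy_web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this toy example, page `c` ends up with the highest score because everything links to it, and `a` comes second mostly because `c` links to it, which is the recursive part: a link counts for more when it comes from a page that is itself highly ranked. Page `d`, which nothing links to, is left with only the baseline share.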

Although ChatGPT has clearly captured the public’s imagination and interest in a way that has few precedents, it remains somewhat unclear what it is good for. Despite talk of it replacing search, it is fairly clear that it is specifically useless for various types of retrieval tasks. Not only is it enduringly out of date (sometimes I just want to find a particular tweet I saw two days ago, rather than retrieve facts from history), it also directly refuses to provide certain types of information, not to mention its lack of sourcing and general unreliability.

A separate issue is the design and affordances of a chat-based system. I have long thought that chatbots have been vastly over-hyped, in large part because chat interfaces for information retrieval seem inherently self-defeating. At least until recently, there were basically two modes that made sense to think of when considering chatbots. The first was, for lack of a better term, entertainment. There are all kinds of ways we might interact with a system where we are not looking for specific information, but rather just having fun. In this context, a chat interface may well make a great deal of sense, but most existing systems were, until very recently, generally too incompetent to provide much reward here.

The second mode is information seeking. Here various companies had been betting heavily on chat being the killer interface, but this never made any sense to me. If I want information, I don’t necessarily want to be coaxed into having a conversation; I just want to get the information as easily as possible. Although a chat interface may suggest a certain style of interaction, it is really just another interface to be manipulated or hacked. As such, I will experiment and discover whatever is the most efficient way of getting information, without necessarily conforming to the intended format.1

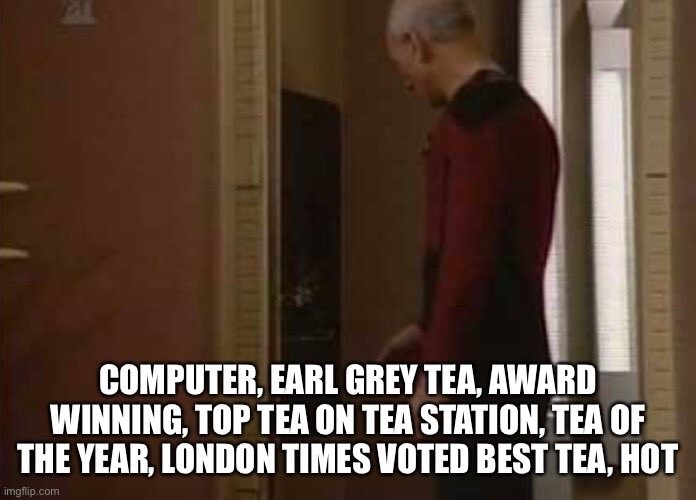

Interestingly, we are already seeing extensive examples of the latter type of loose “hacking” taking place, especially in people’s attempts to use various prompting strategies to get LLM-based systems to break out of their “safe” modes, or “reveal” their underlying configuration.2 As with all great internet trends, things have been quickly memeified, with my favourite being the image below (which I encountered on Mastodon, with the poster noting they had taken it from a private Discord).

People are well aware that you can get vastly different answers from ChatGPT depending on how you phrase your input, and there is a rapidly growing folk knowledge of how to get certain types of response, including asking it to answer in the voice of a particular person. Frankly, ChatGPT is just fun in a way that previous chat systems have not been. People are excited in part because it is a whole new world to discover. Because it is so multifaceted, there is an endless world to probe, much like wandering through Wikipedian rabbit holes. For a certain type of person, this is catnip. However, much of this remains fundamentally much closer to entertainment than retrieval.

Why, then, have we seen such extensive deployments of chat-style interfaces in domains like customer service? I assume part of this must be hype and miscalculation, but there is also likely an element of producing desired user behaviour, perhaps even something as basic as the fact that users looking for a refund may give up sooner when they’re dealing with a chatbot than with a person. Given the inability of most systems to handle anything outside the ordinary, it seems intuitively obvious that a well-structured menu of options is far preferable to a system which pretends it can carry out a conversation, though it’s entirely possible that certain types of people have different preferences here.

In any event, the idea that one type of system will simply replace another places far too much faith in the idea that everything else will remain stable. Even as these debates are playing out, the nature of text on the internet is quickly changing. The fact that these systems are so capable means that more and more text is being produced that is not written by humans, which is quickly becoming integrated into the web, upon which most of these systems are based.

Indeed, it could be that the past couple of decades will end up being a kind of golden age for understanding human linguistic production. Before then, there was nothing comparable in terms of large scale digital text. From this point onwards, it will become increasingly hard to know the source of various bits of text, limiting our ability to make inferences about people based on it.

Clearly we will see all manner of experiments with new ways of doing search, including conversational interfaces, but it is far from a sure thing that people will prefer this, especially for certain types of tasks. Rather, to the extent that these systems are truly impressive, we should expect things to change in a much more fundamental way.

In particular, why are you even searching for that thing you are searching for (or asking ChatGPT about) in the first place? Are you reading up on some obscure topic from personal interest? Great, a chatbot-style system might be a wonderful accompaniment to Wikipedia, books, games, and other forms of infotainment. By contrast, are you seeking information for some instrumental purpose? If so, how much longer will that purpose be relevant? What is the point of looking up the spelling of words, when we have such excellent spell checkers? What is the point of writing an email if it will be summarized and acted on by a machine learning model at the other end?

In the case of search, it is entirely possible that parts of what we currently use search for will end up in a different domain, potentially even something like having an open-ended dialogue which provides a combination of entertainment, advertising, and nudges. It may also become less and less relevant to need to find some specific piece of information (to the extent that such things can even be discretized). But wherever I am trying to find some specific document that exists somewhere on the internet (whether I know what I’m looking for or not), there is no reason that I should prefer to be coerced into interacting in the form of a conversation. Rather, we will continue to hack and learn to exploit these interfaces, just as we do with all the rest.

1. The one thing you can guarantee for a demo of any NLP project is that people will immediately try to see if they can break it, by defying the intentions or expectations of the creator.

2. I put these terms in quotes, since it’s not 100% clear that any of these things are happening exactly as people think they are.