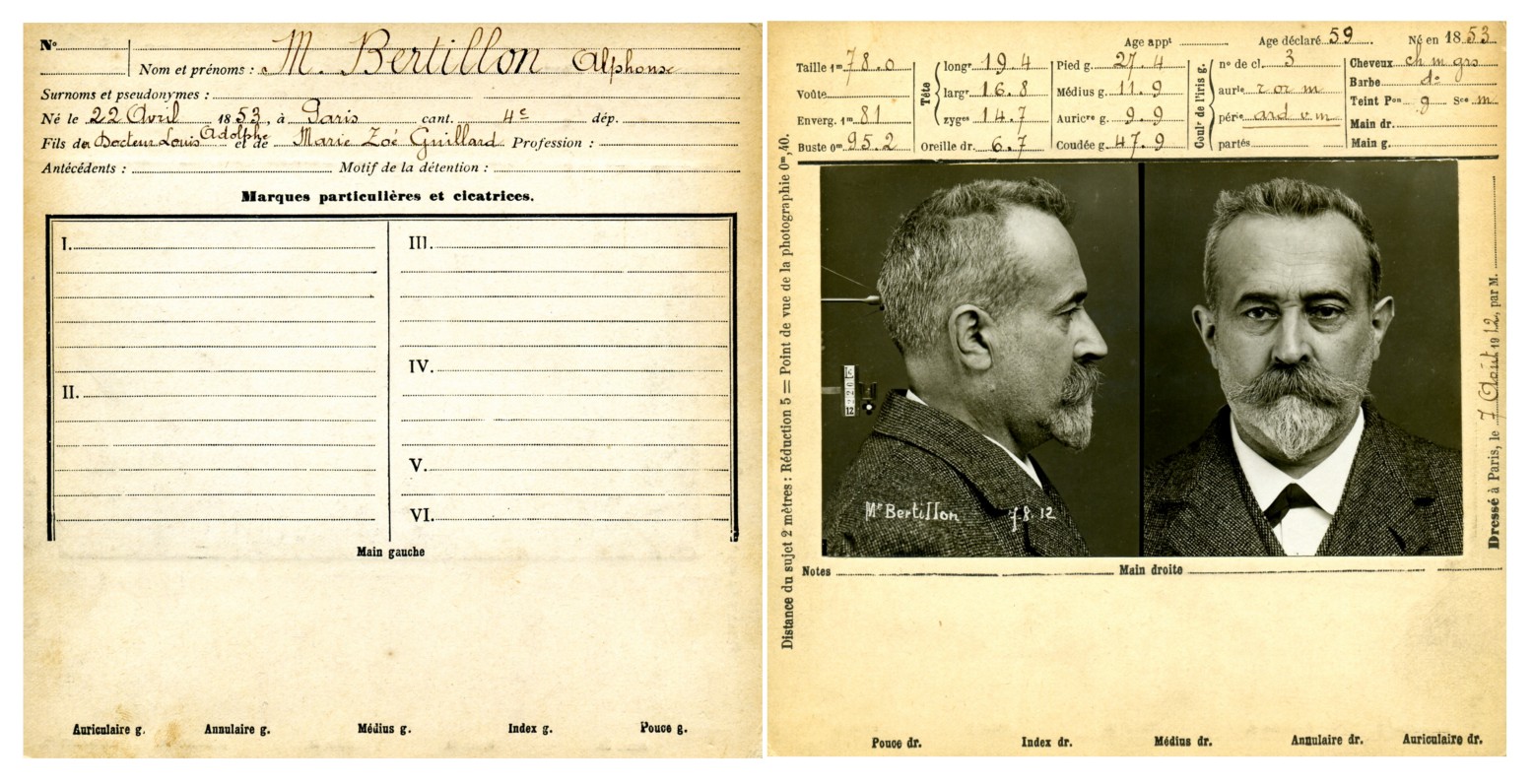

Anthropometric data sheet (both sides) of Alphonse Bertillon (1853–1914).

As anyone who has been paying attention will have noticed, the past year has seen a dramatic expansion in the use of face recognition technology, including at schools, at border crossings, and in interactions with the police. Most recently, Delta announced that some passengers in Atlanta will be able to check in and go through security using only their face as identification. Most news coverage of this announcement emphasized the supposed convenience, efficiency, and technical novelty, while underplaying any potential hazards. In fact, the combination of widely available images, the ability to build on existing infrastructure, and a legal landscape that places very few restrictions on recording means that face recognition represents a unique threat to privacy that should concern us greatly.

The origin of an idea

At its core, face recognition is what is known as a classification problem: given an image of someone, return a yes-or-no decision as to whether that image matches an existing record. We rarely stop to think how remarkable it is that we as humans are able to do this so easily. Unfortunately, we are also unable to explain exactly how we do it, or to break the process down into steps that could be programmed into a computer. Automated face recognition is therefore made possible by using machine learning to discover statistical patterns in large datasets. In particular, modern “deep learning” systems work by learning a series of mathematical transformations that convert the input image and the existing record into a single number: the probability (according to the computer) that they are a match.
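To make the idea of reducing a comparison to a single number concrete, here is a minimal sketch. It assumes, as many modern systems do, that each face has already been converted into a vector of numbers (an “embedding”) by a trained network; the vectors below are invented for illustration, and the function names `cosine_similarity` and `is_match` and the threshold of 0.8 are hypothetical choices, not taken from any particular product. Thresholding the score produces the yes-or-no decision.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two embedding vectors, between -1 and 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def is_match(probe, reference, threshold=0.8):
    """Turn the similarity score into a yes/no decision (threshold is hypothetical)."""
    return cosine_similarity(probe, reference) >= threshold

# Invented 4-dimensional embeddings; a real system would compute these
# with a trained deep network and use far more dimensions.
reference = [0.9, 0.1, 0.3, 0.2]
same_person = [0.85, 0.15, 0.35, 0.2]
different_person = [0.1, 0.9, 0.2, 0.4]

print(is_match(same_person, reference))       # True
print(is_match(different_person, reference))  # False
```

Where the threshold is set determines how readily the system says “yes”, which is exactly the dial that trades one kind of error against the other.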

The idea of using photographs to identify people has a long history, going back to the very beginnings of photography. Photographs were famously used by police to help identify Communards after the crushing of the Paris Commune in 1871, but the systematic use of photography for this purpose really began with the “Bertillonage” system developed by Alphonse Bertillon in 1879. His major insight was that photographs by themselves were effectively useless for identifying unknown individuals, unless one could first narrow down the set of possibilities.

To solve this problem, Bertillon proposed taking a series of eleven measurements from anyone who was arrested, such as the width of the head and the height of the right ear. These measurements (each quantized as small, typical, or large) together formed a kind of code which could be used as an index into a card file, effectively pre-filtering the set of possible matches, using a technique that we now refer to as “hashing”.
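Bertillon’s filing scheme can be sketched in a few lines of Python. This is a loose illustration under stated assumptions: it uses only three of the eleven measurements, and the cut-off values (in centimetres) separating small, typical, and large are invented rather than taken from Bertillon’s actual tables. The quantized measurements form a key, and only cards filed under the same key need to be compared by photograph. With all eleven measurements at three levels each, there would be up to 3^11 = 177,147 distinct keys.

```python
from collections import defaultdict

# Hypothetical cut-offs (cm) for three of Bertillon's eleven measurements.
CUTOFFS = {
    "head_length": (18.5, 19.5),
    "head_width": (15.0, 16.0),
    "right_ear_length": (6.0, 6.5),
}

def quantize(value, low, high):
    """Map a measurement to one of three coarse classes."""
    if value < low:
        return "small"
    if value <= high:
        return "typical"
    return "large"

def bertillon_key(measurements):
    """Combine the quantized measurements into a key for the card file."""
    return tuple(quantize(measurements[name], *CUTOFFS[name]) for name in CUTOFFS)

# The card file: each key ("drawer") holds the names filed under it.
card_file = defaultdict(list)

def file_card(name, measurements):
    card_file[bertillon_key(measurements)].append(name)

def candidates(measurements):
    """Only cards in the matching drawer need to be checked by photograph."""
    return card_file.get(bertillon_key(measurements), [])

file_card("A", {"head_length": 19.0, "head_width": 15.5, "right_ear_length": 6.2})
file_card("B", {"head_length": 20.1, "head_width": 15.5, "right_ear_length": 6.8})
print(candidates({"head_length": 19.2, "head_width": 15.8, "right_ear_length": 6.4}))  # ['A']
```

A Python dictionary is itself a hash table, so the card file here is quite literally hashed on the Bertillon key; the coarse classes tolerate small measurement errors while still discarding most of the file.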

The cards themselves included the person’s name, a standardized pair of photographs (frontal and profile views of the face), and other identifying marks, such as scars and tattoos, thus allowing anyone who had previously been arrested to be positively identified. In the modern re-imagining of this system, our face has become the index, and the record it retrieves now contains vastly more data on us than could have been imagined by anyone in the early years of criminology.

How is it that we can now do automatically what Bertillon could not? Part of the reason is that computers have made it possible to efficiently store and inspect enormous numbers of records. More importantly, however, machine learning has allowed us to develop software that is able to generalize (to some extent) beyond what it has previously encountered. Factors such as changes in lighting or expression can make a vast difference in the actual pixel content of an image. However, by learning from millions of photographs, machine learning systems are often able to separate the “signal” in the image (in this case, the face to be recognized) from the “noise” (such as the lighting conditions or the angle of the face).
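A toy example shows why raw pixel values are such a poor basis for comparison. Below, a made-up six-pixel “image” is brightened and its contrast increased. The raw distance between the two versions is large, yet a simple fixed normalization (subtracting the mean and dividing by the standard deviation) makes them identical. This is a hand-designed fix for one specific kind of variation, not a learned model. Real changes in lighting, shadows, expression, and pose are far too complex for any such formula, which is precisely why modern systems learn their representations from data.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two pixel vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def normalize(pixels):
    """Z-score the pixels: subtract the mean, divide by the standard deviation."""
    mean = sum(pixels) / len(pixels)
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))
    return [(p - mean) / std for p in pixels]

# A made-up six-pixel greyscale "face", and the same scene under brighter,
# higher-contrast lighting (every pixel scaled by 1.5 and shifted by 40).
face = [50, 80, 120, 90, 60, 100]
brighter = [1.5 * p + 40 for p in face]

print(euclidean(face, brighter))                        # about 202: a large raw difference
print(euclidean(normalize(face), normalize(brighter)))  # essentially 0 after normalizing
```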

On some level, this particular application of machine learning seems to sidestep some of the common concerns about algorithmic fairness that are being widely discussed today. Unlike, for example, the problem of predicting whether or not someone will commit another crime, identifying someone from a photograph requires no speculation about future behavior or underlying traits. Either the photograph is a picture of you, or it is not, and this does not require the construction of problematic categories, such as “likely to recidivate”. The fact is, however, that widespread face recognition, whether controlled by government agencies or corporations, has the potential to lead to a massive loss of privacy, an increased likelihood of a severe data breach, and a worsening of existing inequalities.

The state of the art

As advocates are keen to point out, face recognition is in many ways similar to existing methods of biometric identification, such as fingerprints and iris scans. There are, however, several critical differences. First, face recognition is minimally invasive, and can work without requiring the subject to willingly allow themselves to be scanned. The face provides a large enough target to be recognized from across the street, yet is distinct enough between individuals to use as the basis for identification. In many contexts, of course, we may not even be aware that we are under surveillance and being classified.

Second, face recognition can build on existing infrastructure in a way that other methods cannot. Biometric signals such as iris scans and fingerprints typically require specialized hardware to collect. With face recognition, by contrast, it may be possible to identify people in footage taken from standard equipment, such as CCTV cameras. Although the technology will be more accurate on images acquired under controlled conditions, the results from even poor-quality images might narrow down the possibilities enough to prove useful, much like Bertillon’s eleven measurements. Especially in urban areas, surveillance cameras have become so common that we mostly no longer question their presence. As such, this technology could potentially be deployed widely almost overnight with minimal warning or cost.

Third, and perhaps most importantly, unlike fingerprints or iris scans, where an authenticated reference image must be specially acquired, the data required to train a face recognition system is already widely available. For the former technologies, individuals typically provide the information voluntarily (as in trusted traveller programs, such as US Global Entry), or are compelled to provide it (after being arrested, for example). For face recognition, by contrast, most of us have already unthinkingly distributed our reference data across the internet, often in a form that is directly attached to our personally identifying information.

Even those of us who are more circumspect about social media are very likely to be in a government database of driver’s license photos, which at least 26 states allow law enforcement to search, according to the Georgetown Center on Privacy & Technology. These sorts of images, especially the numerous photographs on social media, provide the ideal training data for machine learning systems.

Face recognition will always work best when attempting to verify the identity of a known person, but it can also be used to attempt to match an unknown individual against a large database of possibilities. The ability to identify someone in this manner clearly depends on whether or not the person is actually in the database. Less obviously, as the number of people in the database increases, so does the probability of mistakes, whether false negatives (where the true match is not recognized) or false positives (where the person in the image is misidentified as someone else), with an inherent trade-off between the two.
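A back-of-the-envelope calculation shows why database size matters. Suppose, hypothetically, that a system wrongly accepts a single non-matching pair of faces only 1 time in 10,000, and that each comparison errs independently (a simplifying assumption that real systems will not satisfy exactly). For a per-comparison false match rate p and N non-matching records, the chance that a search turns up at least one false match is then 1 - (1 - p)^N.

```python
def prob_any_false_match(false_match_rate, database_size):
    """Probability that at least one of `database_size` non-matching records
    scores above the threshold, assuming independent comparisons that each
    produce a false match with probability `false_match_rate`."""
    return 1 - (1 - false_match_rate) ** database_size

# Hypothetical per-comparison false match rate of 1 in 10,000.
for n in (1_000, 10_000, 100_000):
    print(f"{n:>7,} records: {prob_any_false_match(0.0001, n):.3f}")
```

Under these assumptions, the chance of at least one false match is roughly 10% for a database of 1,000 people, about 63% for 10,000, and essentially certain for 100,000, even though each individual comparison looks very reliable.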

Manufacturers currently claim quite high levels of accuracy for their systems, but there are many reasons why such numbers are probably not credible. Success will vary greatly, for example, with the number of people in the database, the quality of the source and reference images, the conditions under which they were acquired, and so on. Moreover, as has been widely documented, most systems exhibit serious biases, such that they are most accurate when attempting to identify white men, and less accurate on other people. While the idea of being harder to identify might at first seem like an advantage from the perspective of privacy, it is important to remember that lower accuracy will translate into both false negatives and false positives. As such, current systems are very likely to generate more misidentifications for women and people of color.
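The link between accuracy, the matching threshold, and the two kinds of error can be illustrated with a short sketch. The similarity scores below are entirely made up for two hypothetical groups of people. For group B, the scores for genuine pairs (same person) and impostor pairs (different people) overlap more, which is what lower accuracy on a group looks like in practice. The function name `error_rates` and the threshold of 0.7 are illustrative assumptions.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false negative rate, false positive rate) at a threshold.

    genuine_scores: similarity scores for pairs that are the same person.
    impostor_scores: similarity scores for pairs of different people.
    """
    false_negatives = sum(s < threshold for s in genuine_scores)
    false_positives = sum(s >= threshold for s in impostor_scores)
    return (false_negatives / len(genuine_scores),
            false_positives / len(impostor_scores))

# Made-up scores. Group B's genuine and impostor scores overlap more,
# i.e. the hypothetical system is less accurate on group B.
group_a = {"genuine": [0.95, 0.92, 0.90, 0.88, 0.85],
           "impostor": [0.30, 0.40, 0.45, 0.50, 0.55]}
group_b = {"genuine": [0.90, 0.80, 0.72, 0.65, 0.60],
           "impostor": [0.40, 0.55, 0.68, 0.75, 0.82]}

threshold = 0.7
for name, g in (("A", group_a), ("B", group_b)):
    print(name, error_rates(g["genuine"], g["impostor"], threshold))
# A (0.0, 0.0)
# B (0.4, 0.4)
```

Raising the threshold to 0.8 lowers group B’s false positive rate to 0.2 but raises its false negative rate to 0.6. When the score distributions overlap, no threshold removes both kinds of error, and a setting that works well for group A leaves group B with more of both.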

Furthermore, technologies like face recognition can still pose a threat even if their accuracy is much lower than claimed. Given how policing technologies have been used in the past, there is every reason to think that we will see such systems deployed as a mechanism for choosing whom to detain and interrogate. Just as the New York City Police Department relied heavily on the ambiguous “furtive movements” category to justify stop-and-frisk encounters (which showed serious racial disparities in who was selected), face recognition could be used in a similar way: to allow certain institutions to justify the behavior they already want to justify. In other words, depending on your purpose, poor accuracy may be more of a feature than a bug.

Personalized surveillance

Much of the concern about face recognition raised so far has focused on potential misuse by the state. Governments, after all, have everything they need to deploy widespread face recognition as an operational system: detailed information about all citizens, existing infrastructure that can be repurposed, and the ability to shape the legal landscape as necessary. Indeed, it is not hard to imagine a gradual slide towards the situation that currently exists in China, where an omnipresent network of cameras and face recognition is used for social control, down to identifying pedestrians who cross the street illegally.

Although there is clearly a need to regulate government use of this technology, it is also critical that we think about its use by the private sector. If anything, commercial applications are likely to be the “friendly face” of face recognition. That is, we will encounter ways of using the technology that will be hard to resist, and which will gradually normalize its widespread use. We have already seen several examples of this, such as the ability to unlock your iPhone with your face, the automatic tagging of friends in photographs on Facebook, and the Amazon Go store, which automatically charges people for whatever they take off the shelf, without needing cashiers.

While there is little doubt that this technology has the potential to improve our lives, including in ways we haven’t even thought of yet, there are also numerous ways for it to go wrong. In particular, given that personal data has become such an important resource in the modern economy, there will inevitably be a drive for companies to use it to collect more data about us, and to connect our online identity to our real-world activities.

To some, this may seem like a moot point, given that we already willingly provide so much of our personal information to existing corporations, including our real-time location, moment by moment, via our smartphones. The critical difference, however, is that we have at least in principle entered into an agreement with these companies which specifies what they can and cannot do with that data. Moreover, because their business model depends on us continuing to provide them with our information, they are incentivized to at least try to maintain our trust, though they often fail. Companies that acquire our data without our consent, on the other hand, have little incentive to adequately protect that data, and typically provide no way of opting out.

The credit scoring industry provides a perfect illustration of this. As Josh Lauer documents in his book, Creditworthy, the personal credit scoring industry began well over a century ago, initially gleaning whatever information it could about individuals from newspaper gossip columns and personal reports, and eventually expanding to create huge databases with information on virtually everyone. The threat created by this approach was revealed dramatically in 2017, when Equifax announced that its system had been compromised and that the personal information of nearly 150 million people had been exposed, a breach for which the company has faced no real consequences.

Given that we have effectively no legal right to privacy while in public (except in very limited jurisdictions), there is little to stop the credit industry or others from setting up what would amount to a private surveillance network, which could be used to track the movements of millions of people in real time. While this might seem far-fetched, it is technically feasible, legally permissible, and perhaps commercially viable. Indeed, a somewhat lo-fi version of this already exists, in the form of automated license plate readers, which are being used by companies to record and track the location of cars over time.

Once companies enter the business of using face recognition to track individuals, as they almost certainly will, a major breach of highly personal data will become ever more likely and ever more consequential. Without further regulation, the companies that scrape and sell comprehensive profiles of individuals will have every incentive to use this technology, and very little motivation to ensure that it is used responsibly.

The false promise of security

Despite the concerns raised above, many people will still feel that widespread face recognition would be a net positive, because of the perceived benefit in terms of reducing crime or increasing public safety. Although there undoubtedly have been some benefits from more widely distributed surveillance, it is worth noting that face recognition in particular may prove to be relatively easy, if awkward, to fool. As we are increasingly realizing, virtually all deep learning systems are highly vulnerable to so-called “adversarial examples”. That is, it is possible to fool such systems by making subtle modifications to the input, often in ways that are imperceptible to humans.
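The basic mechanism behind adversarial examples can be seen even in a very simple model. The sketch below stands in for a real face recognition network with a hypothetical linear “match / no match” classifier over 10,000 pixel values, and applies a step in the spirit of the fast gradient sign method: every pixel is nudged by at most 0.02 (on a 0 to 1 scale) in whichever direction lowers the score. For a linear model, the gradient of the score with respect to the input is just the weight vector; attacks on deep networks compute this gradient through the whole network, but the principle is the same. The weights, bias, and input are invented for illustration.

```python
N = 10_000  # number of "pixels", each between 0 and 1

# Hypothetical linear model: a positive score means "match".
weights = [0.01 if i % 2 == 0 else -0.01 for i in range(N)]
bias = -9.0

def score(x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_step(x, epsilon):
    """Move each pixel by epsilon against the gradient of the score.
    For this linear model, the gradient with respect to x is the weights."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

x = [0.6 if i % 2 == 0 else 0.4 for i in range(N)]
x_adv = fgsm_step(x, epsilon=0.02)

print(round(score(x), 3))      # about 1.0: classified as a match
print(round(score(x_adv), 3))  # about -1.0: no longer a match
print(max(abs(a - b) for a, b in zip(x, x_adv)))  # about 0.02 per pixel
```

No individual pixel changes by more than 2%, but because the tiny changes are all aligned against the model’s weights, their effects add up across thousands of pixels and flip the decision.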

Although this is easiest to demonstrate by modifying images directly, people have also managed to confuse image recognition systems (such as those used by self-driving cars) by, for example, putting stickers on stop signs so that they will no longer be recognized as such. In the case of face recognition, the equivalent might be applying a pattern to the face in the form of makeup, or some other decoration, as proposed by artist Adam Harvey and others.

Developing defenses against adversarial examples is an active area of research, but thus far, it seems as though this will be an ongoing arms race where attackers are likely to maintain an advantage. Of course, even if this were not the case, more low-tech options are likely to remain effective. In the most extreme case, one could imagine participating in a “security through obscurity” approach, where people take turns wearing masks designed to look like other people, in an attempt to confuse surveillance systems on a large scale.

Preserving our privacy will always remain possible, but it seems likely to come with ever-increasing costs, most of which will be borne by those with the least power. While we can imagine a dystopian future in which designer masks become the latest fashion trend, it would be highly preferable to use standard channels of regulation to place reasonable limits on such technologies, as has been called for by many entities, including Microsoft and the ACLU. Face recognition technology will inevitably continue to improve, but it is up to us to decide how it will be used.